Written by Gerald Frilot. Published by Tony Harper.

Amazon CloudWatch is a unified monitoring service for AWS services and your cloud applications. Using CloudWatch, you can:

- monitor your AWS account and resources

- generate a stream of events

- trigger alarms and actions for specific conditions

- manually export CloudWatch log groups to an Amazon S3 bucket

Exporting data to an S3 bucket is important if your organization needs to report on CloudWatch data for a period longer than the configured retention time. After the retention time expires, log events are permanently deleted. Manual exports reduce the risk of data loss, but they have one major disadvantage: as noted in the AWS documentation, each AWS account can run only one active export task at a time. This is manageable if you only have a few log groups to export, but it becomes time-consuming and error-prone if you need to export more than 100 log groups on a regular basis.

Let’s walk through a step-by-step solution that automates exporting log groups to an S3 bucket, using a Lambda function triggered by CloudWatch events. You can use an existing S3 bucket or create a new one.

Amazon Simple Storage Service (S3)

Log in to your AWS account, search for the Amazon S3 service, and follow these steps to create a bucket:

- Select a meaningful name

- Select an AWS Region

- Keep all defaults

- ACLs disabled (Recommended)

- Block all public access (Disabled)

- Bucket Versioning (Disable)

- Default encryption (Disable)

- Select Create Bucket (This creates a new S3 bucket for data storage)

Once the bucket is created, navigate to the Permissions tab.

Update the bucket policy to allow CloudWatch to store objects in the S3 bucket. Use the following policy, replacing YOUR-REGION and BUCKET_NAME_HERE with your own values:

    {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {
                    "Service": "logs.YOUR-REGION.amazonaws.com"
                },
                "Action": "s3:GetBucketAcl",
                "Resource": "arn:aws:s3:::BUCKET_NAME_HERE"
            },
            {
                "Effect": "Allow",
                "Principal": {
                    "Service": "logs.YOUR-REGION.amazonaws.com"
                },
                "Action": "s3:PutObject",
                "Resource": "arn:aws:s3:::BUCKET_NAME_HERE/*",
                "Condition": {
                    "StringEquals": {
                        "s3:x-amz-acl": "bucket-owner-full-control"
                    }
                }
            }
        ]
    }
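If you manage several buckets or regions, the policy above can be generated instead of edited by hand. The following is a minimal sketch, not part of the original walkthrough: the function name `build_bucket_policy` and the example region and bucket values are hypothetical, and the output simply mirrors the policy shown above.

```python
import json


def build_bucket_policy(region, bucket):
    """Return the CloudWatch Logs export bucket policy as a Python dict."""
    # CloudWatch Logs writes to the bucket as a regional service principal.
    principal = {"Service": f"logs.{region}.amazonaws.com"}
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Lets the service check the bucket ACL before exporting.
                "Effect": "Allow",
                "Principal": principal,
                "Action": "s3:GetBucketAcl",
                "Resource": f"arn:aws:s3:::{bucket}",
            },
            {
                # Lets the service write objects, provided the bucket owner
                # keeps full control of each exported object.
                "Effect": "Allow",
                "Principal": principal,
                "Action": "s3:PutObject",
                "Resource": f"arn:aws:s3:::{bucket}/*",
                "Condition": {
                    "StringEquals": {"s3:x-amz-acl": "bucket-owner-full-control"}
                },
            },
        ],
    }


if __name__ == "__main__":
    # Example values only; substitute your own region and bucket name.
    print(json.dumps(build_bucket_policy("us-west-2", "my-log-archive"), indent=2))
```

The printed JSON can be pasted into the bucket policy editor. With boto3 it could also be applied through the S3 client's `put_bucket_policy(Bucket=..., Policy=...)` call, passing the policy as a JSON string.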

AWS Lambda

The S3 bucket is now configured to accept objects written by the CloudWatch service. The next step is to create a Lambda function that contains the code for receiving CloudWatch events and storing log data in the S3 bucket.

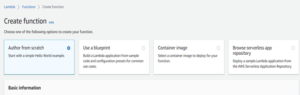

Search for the Lambda service in your AWS account, navigate to functions, and select Create Function.


Follow these steps:

- Select the Author from scratch template

- Under Basic Information, provide:

  - Function name

  - Runtime (Python 3.9)

  - Instruction set architecture (x86_64 default)

- Keep the defaults under execution role and advanced settings dropdown, and select Create Function

Python Script (Pseudocode)

The Python script imports the boto3 AWS SDK module, which is used to create, configure, and manage AWS services, along with the os and time modules. We create a CloudWatch Logs client and an AWS Systems Manager client for Parameter Store. Within the Lambda handler function, we initialize an empty dictionary and two empty lists. The empty dictionary may be useful if we only want to target a specific log group name prefix.

The first list collects all log groups, and the second holds the log groups to export. We then check whether the S3 bucket environment variable exists; if it does not, we return an error. Otherwise, we enter a series of loops. The first loop calls the DescribeLogGroups API and adds the results to the first list. Once all log groups are added, the second loop checks each log group in the first list for the ExportToS3 tag. If the tag exists, we add the log group to the second list.

The final loop iterates over the second list and uses each log group name to look up a matching Parameter Store entry. If a match is found, we compare the stored time value to the current time. If 15 minutes have elapsed, we export the log data to the S3 bucket and then update the Parameter Store value with the current time.
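The steps described above could be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the original script: the helper function names, the `/log-exporter-last-export` parameter prefix (chosen to fit the `log-exporter-*` pattern in the IAM policy), the use of the log group name as the S3 key prefix, and the handling of `LimitExceededException` (to respect the one-export-task limit) are all assumptions. The boto3 import sits inside the handler only so the helpers can be exercised without AWS access.

```python
import os
import time

EXPORT_INTERVAL_MS = 15 * 60 * 1000          # 15-minute boundary from the text
PARAM_PREFIX = "/log-exporter-last-export"   # assumed parameter naming scheme


def list_log_groups(logs):
    """First loop: collect every log group, following nextToken pagination."""
    extra_args = {}      # could also carry logGroupNamePrefix to narrow the search
    log_groups = []
    while True:
        response = logs.describe_log_groups(**extra_args)
        log_groups.extend(response["logGroups"])
        if "nextToken" not in response:
            return log_groups
        extra_args["nextToken"] = response["nextToken"]


def tagged_for_export(logs, log_groups):
    """Second loop: keep only log groups tagged ExportToS3=true."""
    names = []
    for group in log_groups:
        tags = logs.list_tags_log_group(logGroupName=group["logGroupName"])["tags"]
        if tags.get("ExportToS3") == "true":
            names.append(group["logGroupName"])
    return names


def export_log_groups(logs, ssm, bucket, names, now_ms):
    """Final loop: export each group whose last export is at least 15 minutes old."""
    exported = []
    for name in names:
        param = (PARAM_PREFIX + "/" + name).replace("//", "/")
        try:
            last_ms = int(ssm.get_parameter(Name=param)["Parameter"]["Value"])
        except ssm.exceptions.ParameterNotFound:
            last_ms = 0  # first invocation: export everything so far
        if now_ms - last_ms < EXPORT_INTERVAL_MS:
            continue
        try:
            logs.create_export_task(
                logGroupName=name,
                fromTime=last_ms,
                to=now_ms,
                destination=bucket,
                destinationPrefix=name.strip("/"),
            )
        except logs.exceptions.LimitExceededException:
            break  # another export task is active; retry on the next event
        # Record the end of this export so the next one starts from here.
        ssm.put_parameter(Name=param, Type="String", Value=str(now_ms), Overwrite=True)
        exported.append(name)
    return exported


def lambda_handler(event, context):
    bucket = os.environ.get("S3_BUCKET")
    if not bucket:
        return {"error": "S3_BUCKET is not defined"}
    import boto3  # available by default in the Lambda Python runtime
    logs = boto3.client("logs")
    ssm = boto3.client("ssm")
    names = tagged_for_export(logs, list_log_groups(logs))
    now_ms = int(time.time() * 1000)
    return {"exported": export_log_groups(logs, ssm, bucket, names, now_ms)}
```

Because each export starts at the timestamp stored by the previous one, consecutive exports cover adjacent time ranges rather than rewriting earlier data.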

- Select Deploy to save our code changes and then navigate to the Configuration tab

- We now need to create an environment variable that references the S3 bucket where our CloudWatch events will be stored

Note: The key must be set to S3_BUCKET and the value to the name of your S3 bucket. The Lambda code reads this variable, so set it before invoking the function.

- Next, update the Lambda function’s basic execution role. This grants the function permission to perform read and update operations on other AWS services. Use the following policy to complete the process:

    {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "VisualEditor0",
                "Effect": "Allow",
                "Action": [
                    "logs:ListTagsLogGroup",
                    "logs:DescribeLogGroups",
                    "logs:CreateLogGroup",
                    "logs:CreateExportTask",
                    "ssm:GetParameter",
                    "ssm:PutParameter"
                ],
                "Resource": "arn:aws:logs:{your-region}:{your-aws-account-number}:*"
            },
            {
                "Sid": "VisualEditor1",
                "Effect": "Allow",
                "Action": [
                    "logs:ListTagsLogGroup",
                    "logs:CreateLogStream",
                    "logs:DescribeLogGroups",
                    "logs:PutLogEvents",
                    "logs:CreateExportTask",
                    "ssm:GetParameter",
                    "ssm:PutParameter",
                    "s3:PutObject",
                    "s3:PutObjectAcl"
                ],
                "Resource": "arn:aws:logs:{your-region}:{your-aws-account-number}:log-group:/aws/lambda/{function-name}:*"
            },
            {
                "Sid": "VisualEditor2",
                "Effect": "Allow",
                "Action": "ssm:DescribeParameters",
                "Resource": "*"
            },
            {
                "Sid": "VisualEditor3",
                "Effect": "Allow",
                "Action": [
                    "ssm:GetParameter",
                    "ssm:PutParameter"
                ],
                "Resource": "arn:aws:ssm:{your-region}:{your-aws-account-number}:parameter/log-exporter-*"
            },
            {
                "Sid": "VisualEditor4",
                "Effect": "Allow",
                "Action": [
                    "s3:PutObject",
                    "s3:PutObjectAcl",
                    "s3:GetObject",
                    "s3:GetObjectAcl",
                    "s3:DeleteObject"
                ],
                "Resource": [
                    "arn:aws:s3:::{aws-bucket-name}",
                    "arn:aws:s3:::{aws-bucket-name}/*"
                ]
            }
        ]
    }

AWS Parameter Store

Now that the S3 bucket and the Lambda function are set up, we can turn to AWS Systems Manager Parameter Store, which provides secure, hierarchical storage for configuration data and secrets. This section is for reference only, because the Lambda code takes care of the initial setup and naming conventions for this service. When a CloudWatch event triggers the function, the code checks Parameter Store to determine whether 15 minutes have elapsed since data was last stored in the S3 bucket. The first invocation sets the Parameter Store value to 0, and every subsequent event checks and updates that value on 15-minute boundaries. Data is never overwritten, and the initial setup requires no user intervention.

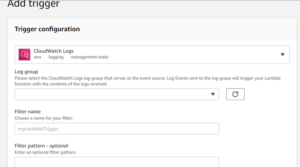

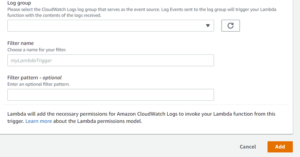

Lambda Triggers

Return to the Lambda function and make one final update under the Configuration > Triggers tab:

- Select Add trigger

- Fill in the following fields and then select Add

  - CloudWatch Logs (click the caret to open the dropdown menu and select the right service)

  - Log group

  - Filter name

- Repeat the two steps above for each log group that requires S3 storage.

Note: Complete the previous step before the following one. This order avoids writing data to the S3 bucket for an active environment.

CloudWatch Tags

Our code only exports log groups that carry the ExportToS3 tag, and in this walkthrough the tag is applied from a terminal. Refer to the AWS CLI documentation to learn how to set up command-line access for your AWS environment. Once command-line access is configured, tag each log group that needs to be exported with the following command:

    aws --region us-west-2 logs tag-log-group --log-group-name /api/aws/connect --tags ExportToS3=true
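When many log groups need the tag, the same operation can be scripted rather than typed once per group. The sketch below assumes the boto3 `tag_log_group` call, the SDK counterpart of the CLI command above (newer API versions favor `tag_resource`); the function name and the example log group list are hypothetical.

```python
def tag_log_groups_for_export(logs, log_group_names):
    """Apply the ExportToS3=true tag to each named log group.

    `logs` is a CloudWatch Logs client, e.g. boto3.client("logs").
    Returns the names that were tagged, in order.
    """
    tagged = []
    for name in log_group_names:
        logs.tag_log_group(logGroupName=name, tags={"ExportToS3": "true"})
        tagged.append(name)
    return tagged


# Example usage (requires boto3 and AWS credentials):
#
#   import boto3
#   client = boto3.client("logs", region_name="us-west-2")
#   tag_log_groups_for_export(client, ["/api/aws/connect"])
```

Passing the client in as an argument keeps the function easy to try against a test double before running it on a live account.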

We are now automatically set up to export CloudWatch log groups to our S3 bucket!

AWS Solution Delivered

We chose AWS services because of their flexibility and ability to deliver results to market in a timely manner. By building on the AWS Cloud, we were able to export data to an S3 bucket effectively, driven by CloudWatch events.

