The Data and AI Summit 2022 brought major announcements for the Databricks Lakehouse Platform. Among them were several notable enhancements to Databricks Workflows, the fully managed orchestration service that is deeply integrated with the Databricks Lakehouse Platform, and to Delta Live Tables (DLT). With these new capabilities, Workflows enables data engineers, data scientists, and analysts to build reliable data, analytics, and ML workflows on any cloud without needing to manage complex infrastructure.

The five most important announcements are:

- Build Reliable Production Data and ML Pipelines with Git Support:

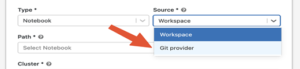

We use Git to version control all of our code. With Git support in Databricks Workflows, you can use a remote Git reference as the source for the tasks that make up a Databricks Workflow. This eliminates the risk of accidental edits to production code, removes the overhead of maintaining and updating a production copy of the code in Databricks, and improves reproducibility because each job run is linked to a commit hash. Git support for Workflows is available in Public Preview and works with a wide range of Databricks-supported Git providers, including GitHub, GitLab, Bitbucket, Azure DevOps, and AWS CodeCommit.
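As a rough illustration, a job definition that takes its task code from a remote Git reference might look like the payload below. This is a minimal sketch, assuming the `git_source` block and the `source` field of the Jobs API 2.1; the job name, repository URL, task key, and notebook path are hypothetical placeholders, and the cluster configuration is omitted for brevity.

```python
import json

# Hypothetical job specification: every name, URL, and path is a placeholder.
job_spec = {
    "name": "nightly-etl",
    # Remote Git reference used as the source for the job's tasks.
    "git_source": {
        "git_url": "https://github.com/example-org/example-repo",
        "git_provider": "gitHub",
        "git_branch": "main",  # a tag or a commit could be pinned instead
    },
    "tasks": [
        {
            "task_key": "ingest",
            "notebook_task": {
                # With a Git source, the path is relative to the repository root.
                "notebook_path": "notebooks/ingest",
                "source": "GIT",
            },
        }
    ],
}

# Serialize the specification as it would be sent in a job-creation request.
payload = json.dumps(job_spec, indent=2)
print(payload)
```

Because the job points at a Git reference rather than a workspace copy, each run can be traced back to the commit it executed.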

- Orchestrate even more of the lakehouse with SQL tasks:

Real-world data and ML pipelines consist of many different types of tasks working together. With the addition of the SQL task type in Jobs, you can now orchestrate even more of the lakehouse. For example, you can trigger a notebook to ingest data, run a Delta Live Tables pipeline to transform the data, and then use the SQL task type to schedule a query and refresh a dashboard.
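The example flow described here (notebook, then DLT pipeline, then SQL query and dashboard refresh) could be expressed as a multi-task job roughly like this. It is a sketch under assumptions: the field names follow the Jobs API 2.1 task types as I understand them, and every task key, path, and ID is a hypothetical placeholder.

```python
import json

# Hypothetical IDs and paths; real values come from your own workspace.
tasks = [
    {
        "task_key": "ingest",
        "notebook_task": {"notebook_path": "/Repos/example/ingest"},
    },
    {
        "task_key": "transform",
        "depends_on": [{"task_key": "ingest"}],
        "pipeline_task": {"pipeline_id": "<dlt-pipeline-id>"},
    },
    {
        "task_key": "refresh_query",
        "depends_on": [{"task_key": "transform"}],
        "sql_task": {
            "query": {"query_id": "<query-id>"},
            "warehouse_id": "<sql-warehouse-id>",
        },
    },
    {
        "task_key": "refresh_dashboard",
        "depends_on": [{"task_key": "refresh_query"}],
        "sql_task": {
            "dashboard": {"dashboard_id": "<dashboard-id>"},
            "warehouse_id": "<sql-warehouse-id>",
        },
    },
]

# Collect the task type used by each task (the key that ends in "_task").
task_types = {
    t["task_key"]: next(k for k in t if k.endswith("_task")) for t in tasks
}
print(json.dumps(task_types, indent=2))
```

Each task names its upstream task in `depends_on`, so the SQL tasks only run after the pipeline has refreshed the underlying tables.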

- Save Time and Money on Data and ML Workflows With “Repair and Rerun”:

To support real-world data and machine learning use cases, organizations create sophisticated workflows with numerous tasks and dependencies, ranging from data ingestion and ETL to ML model training and serving. Each of these tasks must be completed in the correct order, so when an important task in a workflow fails, all of its downstream tasks are affected. The new “Repair and Rerun” capability in Jobs addresses this issue by allowing you to rerun only the failed tasks and the tasks that depend on them, rather than the entire workflow, saving you time and money.
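To make the saving concrete, the sketch below works out which tasks a repair would need to rerun in a small dependency graph. It is a plain Python illustration, not the Jobs implementation: it assumes a repair covers the failed tasks plus any downstream tasks that could not run because of them, and the function name and the example workflow are hypothetical.

```python
from collections import deque


def tasks_to_rerun(dependencies, failed):
    """Return the failed tasks plus every task downstream of them.

    `dependencies` maps each task to the list of tasks it depends on.
    """
    # Invert the mapping: upstream task -> tasks that depend on it.
    downstream = {task: [] for task in dependencies}
    for task, upstreams in dependencies.items():
        for upstream in upstreams:
            downstream[upstream].append(task)

    # Breadth-first walk from the failed tasks through their dependents.
    to_rerun = set(failed)
    queue = deque(failed)
    while queue:
        current = queue.popleft()
        for child in downstream[current]:
            if child not in to_rerun:
                to_rerun.add(child)
                queue.append(child)
    return to_rerun


# Hypothetical workflow: "features" failed after "ingest" and "clean" succeeded.
dependencies = {
    "ingest": [],
    "clean": ["ingest"],
    "features": ["clean"],
    "train": ["features"],
    "report": ["clean"],
}

rerun = tasks_to_rerun(dependencies, failed=["features"])
print(sorted(rerun))  # ['features', 'train']
```

In this example, `ingest`, `clean`, and `report` already succeeded and are left alone; only two of the five tasks run again.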

- Easily share context between tasks:

A task sometimes depends on the results of an upstream task. Previously, to access data from an upstream task, you had to store it somewhere outside the context of the job, such as a Delta table. The Task Values API now allows tasks to set values that can be retrieved by subsequent tasks. To facilitate debugging, the Jobs UI displays the values set by tasks.
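The pattern looks roughly like the sketch below. In a Databricks notebook the calls are made through `dbutils.jobs.taskValues` (shown in the comments), which is not available outside Databricks, so this example uses a small in-memory stand-in to stay runnable anywhere. The `InMemoryTaskValues` class, the task key `ingest`, and the key `row_count` are hypothetical and exist only for illustration.

```python
class InMemoryTaskValues:
    """Hypothetical local stand-in for the task values utility (illustration only)."""

    def __init__(self):
        self._values = {}         # (task_key, key) -> value
        self.current_task = None  # task that is "running" right now

    def set(self, key, value):
        # Values are stored under the task that sets them.
        self._values[(self.current_task, key)] = value

    def get(self, taskKey, key, default=None):
        # Downstream tasks read a value by naming the upstream task.
        return self._values.get((taskKey, key), default)


task_values = InMemoryTaskValues()

# Upstream task "ingest". In a Databricks notebook this would be:
#   dbutils.jobs.taskValues.set(key="row_count", value=1250)
task_values.current_task = "ingest"
task_values.set(key="row_count", value=1250)

# Downstream task "validate". In a Databricks notebook this would be:
#   dbutils.jobs.taskValues.get(taskKey="ingest", key="row_count", default=0)
task_values.current_task = "validate"
row_count = task_values.get(taskKey="ingest", key="row_count", default=0)

print(row_count)  # 1250
```

Small values such as counts, flags, or file paths suit this mechanism; larger results still belong in a table or file.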

- Delta Live Tables Announces New Capabilities and Performance Optimizations:

Databricks announced that it is developing Enzyme, a performance optimization purpose-built for ETL workloads, and launched several new DLT capabilities, including Enhanced Autoscaling. DLT enables analysts and data engineers to quickly create production-ready streaming or batch ETL pipelines in SQL and Python. It simplifies ETL development by allowing you to define your data processing pipeline declaratively: DLT understands your pipeline’s dependencies and automates nearly all operational complexities.

- UX improvements – The UI has been extended to make it easier to manage DLT pipelines, view errors, and grant access to team members with rich pipeline ACLs. An observability UI has also been added that shows data quality metrics in a single view, and pipelines can now be scheduled directly from the UI.

- Schedule Pipeline button – DLT lets you run ETL pipelines continuously or in triggered mode. Continuous pipelines process new data as it arrives and are useful in scenarios where data latency is critical. However, many customers choose to run DLT pipelines in triggered mode to control pipeline execution and costs more closely. To make it easy to trigger DLT pipelines on a recurring schedule with Databricks Jobs, a ‘Schedule’ button has been added to the DLT UI so that users can set up a recurring schedule in a few clicks without leaving the DLT UI.

- Change Data Capture (CDC) – With DLT, data engineers can easily implement CDC with a new declarative APPLY CHANGES INTO API, in either SQL or Python. This new capability lets ETL pipelines easily detect source data changes and apply them to datasets throughout the lakehouse. DLT processes data changes into Delta Lake incrementally, flagging records to insert, update, or delete when handling CDC events.

- CDC Slowly Changing Dimensions (Type 2) – When dealing with changing data (CDC), we often need to update records to keep track of the most recent data. SCD Type 2 is a way to apply updates to a target so that the original data is preserved. For example, if a user entity in the database changes their phone number, we can store all previous phone numbers for that user. DLT supports SCD Type 2 for organizations that need to maintain an audit trail of changes. SCD Type 2 retains a full history of values: when the value of an attribute changes, the current record is closed, a new record is created with the changed data values, and this new record becomes the current record.

- Enhanced Autoscaling (preview) – Manually sizing clusters for optimal performance is challenging when data volumes are changing and unpredictable, as with streaming workloads, and it can lead to overprovisioning. DLT employs an enhanced autoscaling algorithm purpose-built for streaming. DLT's Enhanced Autoscaling optimizes cluster utilization while ensuring that overall end-to-end latency is minimized. It does this by detecting fluctuations in streaming workloads, including data waiting to be ingested, and provisioning the right amount of resources (up to a user-specified limit). In addition, Enhanced Autoscaling gracefully shuts down clusters whenever utilization is low while guaranteeing the evacuation of all tasks to avoid impacting the pipeline. As a result, workloads using Enhanced Autoscaling save on costs because fewer infrastructure resources are used.

- Automated Upgrade & Release Channels – Delta Live Tables (DLT) clusters use a DLT runtime based on Databricks Runtime (DBR). Databricks automatically upgrades the DLT runtime about every 1-2 months, without requiring end-user intervention, and monitors pipeline health after the upgrade.

- Announcing Enzyme, a new optimization layer designed specifically to speed up ETL – Transforming data to prepare it for downstream analysis is a prerequisite for most other workloads on the Databricks platform. While SQL and DataFrames make it relatively easy for users to express their transformations, the input data constantly changes, which requires recomputing the tables produced by ETL. Enzyme is a new optimization layer for ETL that efficiently keeps up to date a materialization of the results of a given query stored in a Delta table. It uses a cost model to choose between various techniques, including techniques used in traditional materialized views, delta-to-delta streaming, and manual ETL patterns commonly used by data engineers.
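To illustrate the CDC and SCD Type 2 behavior described in the list above, here is a small plain Python simulation of applying ordered change events while preserving history. It is only a sketch of the semantics, not how DLT implements APPLY CHANGES INTO; in a DLT pipeline you would declare this in SQL or Python rather than write the loop yourself. The function name, the `op`, `seq`, `start_at`, and `end_at` fields, and the sample events are all hypothetical.

```python
def apply_changes_scd2(events, key, sequence_by):
    """Apply CDC events in sequence order, keeping history (SCD Type 2 style)."""
    history = []  # every record version, both closed and still open
    current = {}  # key value -> index of the open record in `history`

    for event in sorted(events, key=lambda e: e[sequence_by]):
        k, seq = event[key], event[sequence_by]
        # Close the currently open version for this key, if there is one.
        if k in current:
            history[current.pop(k)]["end_at"] = seq
        if event["op"] == "DELETE":
            continue  # a delete closes the record without opening a new one
        # Open a new version that carries the changed values.
        data = {c: v for c, v in event.items() if c not in ("op", sequence_by)}
        history.append({**data, "start_at": seq, "end_at": None})
        current[k] = len(history) - 1
    return history


# Hypothetical change feed; events may arrive out of order.
events = [
    {"op": "INSERT", "user_id": 1, "phone": "555-0100", "seq": 1},
    {"op": "UPDATE", "user_id": 1, "phone": "555-0199", "seq": 3},
    {"op": "INSERT", "user_id": 2, "phone": "555-0142", "seq": 2},
    {"op": "DELETE", "user_id": 2, "phone": None, "seq": 4},
]

history = apply_changes_scd2(events, key="user_id", sequence_by="seq")
open_rows = [row for row in history if row["end_at"] is None]

for row in history:
    print(row)
```

User 1 ends up with two versions (the old phone number closed at sequence 3, the new one still open), while user 2's single version is closed by the delete. An SCD Type 1 target would instead keep only the latest row per key.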

Learn more:

https://databricks.com/blog/2022/06/29/top-5-workflows-announcements-at-data-ai-summit.html

New Delta Live Tables Capabilities and Performance Optimizations – The Databricks Blog
