In this blog, we will explore how to use Langchain, the Azure OpenAI text embedding ADA model, and the Faiss vector store to build a private chatbot that answers questions about a document uploaded from local storage. A private chatbot is a chatbot that interacts with you in natural language and provides information relevant to your needs. A public chatbot answers from general knowledge or public data sources. A private chatbot instead uses your own local document as its source of knowledge. The document's index stays on your machine, and its text is sent only to the Azure OpenAI resource you configure. This way, you keep control over your data and can keep the chatbot personalized and up to date.

Why use Langchain, Azure OpenAI, and Faiss Vector Store?

Langchain, Azure OpenAI, and Faiss Vector Store are three powerful technologies that can help you build a private chatbot with ease and efficiency.

- Langchain is a framework, available for Python and JavaScript, for building applications on top of large language models, including chatbots. It provides classes for the common steps of chatbot development: loading documents, splitting text, creating, storing, and querying embeddings, generating responses, and combining these steps into chains. Langchain also integrates with popular libraries and services, such as Faiss, OpenAI, and Azure OpenAI Service, so you can use them from within your chatbot.

- Azure OpenAI Service is a cloud service that gives you access to OpenAI models through your own Azure resource. These include chat models such as GPT-4 and GPT-3.5 Turbo, DALL-E, and embedding models such as text-embedding-ada-002. You can use it to turn text into embeddings, which are numeric vectors that capture the text's meaning, and to generate text from a prompt and context. In this chatbot, the embedding model encodes your local document and a chat model generates the natural language responses. The service has no operation for decoding embeddings back into text. Embeddings are used to compare texts by meaning.

- Faiss (Facebook AI Similarity Search) is an open-source library from Meta for efficient similarity search over dense vectors, and Langchain wraps it as a vector store. Faiss supports several index types:

  - Exact "flat" indexes compare the query with every stored vector.
  - Compressed indexes based on Product Quantization (PQ) reduce memory use and speed up search at some cost in accuracy.

  Langchain's FAISS wrapper uses an exact flat index by default. You can use it to store the embeddings of your local document and to query them for the chunks most relevant to the user's input.
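Faiss offers several index types. With default settings, Langchain's FAISS wrapper uses an exact "flat" index: every query is compared with every stored vector by Euclidean (L2) distance, and the closest vectors win. The pure-Python sketch below illustrates that brute-force search. It uses hypothetical 3-dimensional toy vectors in place of real 1,536-dimensional ADA embeddings. Faiss performs the same computation in optimized C++ and reports squared distances, which rank results identically. PQ-based indexes approximate this search in exchange for lower memory use.

```python
import heapq
import math


def l2_distance(a: list[float], b: list[float]) -> float:
    """Euclidean distance between two vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def flat_search(index: list[list[float]], query: list[float], k: int) -> list[tuple[int, float]]:
    """Exact k-nearest-neighbor search: score every stored vector, keep the k closest.

    Returns (position, distance) pairs sorted from closest to farthest.
    """
    scored = ((l2_distance(vec, query), pos) for pos, vec in enumerate(index))
    return [(pos, dist) for dist, pos in heapq.nsmallest(k, scored)]


# Hypothetical chunk embeddings (positions 0..3) and a query embedding.
chunk_vectors = [
    [0.9, 0.1, 0.0],
    [0.0, 1.0, 0.0],
    [0.8, 0.2, 0.1],
    [0.0, 0.1, 0.9],
]
query_vector = [0.88, 0.12, 0.02]

for pos, dist in flat_search(chunk_vectors, query_vector, k=2):
    print(pos, round(dist, 3))
```

Because every vector is scored, the result is always the true nearest neighbors. The cost grows linearly with the number of chunks, which is fine for a single document.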

Build a Private Chatbot with Langchain, Azure OpenAI, and Faiss Vector Store

Now that you have an idea of what these technologies can do, let's use them to build a private chatbot for local document queries. The steps are as follows:

Step 1: Install Langchain and its Dependencies

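Install Langchain and its dependencies on your machine:

- the openai package, which Langchain uses to call Azure OpenAI Service
- faiss-cpu for the vector store
- tiktoken for token counting during embedding
- pypdf and docx2txt for the document loaders
- streamlit for the web interface

For example: pip install langchain openai faiss-cpu tiktoken pypdf docx2txt streamlit.

The snippets in this post follow the older Langchain API, for example the langchain.document_loaders imports and the openai_api_base and openai_api_type parameters. Either install versions that support it, or adapt the code for newer releases, which move these classes into the langchain-community and langchain-openai packages.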
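A quick way to confirm the installation is to check that each module can be found before starting the app. The module list below is an assumption based on the imports used in this post, so adjust it if you use other loaders. Some pip package names differ from their module names; for example, faiss-cpu installs the faiss module.

```python
import importlib.util

# Module names imported by this walkthrough (assumed list; edit to match your app).
REQUIRED_MODULES = ["langchain", "openai", "faiss", "tiktoken", "pypdf", "docx2txt", "streamlit"]


def missing_modules(names: list[str]) -> list[str]:
    """Return the top-level module names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All dependencies are installed.")
```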

Step 2: Load your Local Document

Load your local document with the Langchain document loader that matches its format. Langchain provides loaders for many formats, such as TextLoader for plain text, PyPDFLoader for PDF, and Docx2txtLoader for DOCX. The following code snippet loads an uploaded file saved at tmp_file_path. It uses PyPDFLoader if the file is a PDF and Docx2txtLoader otherwise:

```python
from langchain.document_loaders import Docx2txtLoader, PyPDFLoader

# tmp_file_path is the path where the uploaded file was saved
if tmp_file_path.lower().endswith(".pdf"):
    loader = PyPDFLoader(file_path=tmp_file_path)  # load PDF
else:
    loader = Docx2txtLoader(file_path=tmp_file_path)  # load DOCX

documents = loader.load()  # list of Langchain Document objects
```
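The loading snippet assumes the uploaded file has already been written to tmp_file_path. Below is a minimal sketch of that step using only the standard library. The helper name save_to_temp_file is hypothetical. In a Streamlit app, the bytes would typically come from the object returned by st.file_uploader.

```python
import os
import tempfile


def save_to_temp_file(data: bytes, original_name: str) -> str:
    """Write uploaded bytes to a temporary file and return its path (hypothetical helper).

    The original extension is kept (lower-cased) so the caller can pick the right
    loader, e.g. ".pdf" -> PyPDFLoader, ".docx" -> Docx2txtLoader.
    """
    suffix = os.path.splitext(original_name)[1].lower()
    # delete=False keeps the file on disk after it is closed so a loader can open it.
    with tempfile.NamedTemporaryFile(delete=False, suffix=suffix) as tmp_file:
        tmp_file.write(data)
        return tmp_file.name


# Hypothetical Streamlit usage:
# uploaded = st.file_uploader("Upload a document", type=["pdf", "docx"])
# if uploaded is not None:
#     tmp_file_path = save_to_temp_file(uploaded.getvalue(), uploaded.name)
```

Because the file is created with delete=False, remove it with os.remove(tmp_file_path) once the document has been loaded.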

Step 3: Split your Document into Smaller Chunks

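Next, split the document into smaller chunks. This keeps each piece within the embedding model's input limit and lets the chatbot retrieve only the relevant parts. Langchain provides text splitters such as CharacterTextSplitter and RecursiveCharacterTextSplitter for this. The code snippet below splits the loaded documents into overlapping chunks with RecursiveCharacterTextSplitter:

```python
from langchain.text_splitter import RecursiveCharacterTextSplitter

# Split into chunks of up to 1,000 characters, with 200 characters shared between neighbors
splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)
chunks = splitter.split_documents(documents)
```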
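To see what chunk_size and chunk_overlap control, here is a simplified character-window splitter. It is a hypothetical illustration, not Langchain's algorithm. RecursiveCharacterTextSplitter also tries to break on paragraph, line, and word boundaries, whereas this sketch cuts at fixed character positions.

```python
def split_with_overlap(text: str, chunk_size: int, chunk_overlap: int) -> list[str]:
    """Cut text into windows of at most chunk_size characters.

    Consecutive windows share chunk_overlap characters. Any text that straddles a
    cut also appears intact in a neighboring chunk, as long as it is no longer than
    the overlap.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


print(split_with_overlap("abcdefghij", chunk_size=4, chunk_overlap=2))
```

A larger overlap preserves more context across boundaries but produces more chunks, and therefore more embedding requests.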

Alternatively, every loader has a load_and_split method that loads the file and splits it in one call. By default it splits with RecursiveCharacterTextSplitter, or you can pass in any text splitter.

For a PDF, PyPDFLoader produces one document per page, and each resulting chunk keeps its page number in its metadata. Pages longer than the splitter's chunk size are split further.

```python
from langchain.document_loaders import PyPDFLoader

# Load the PDF page by page and split it; each Document records its page number
loader = PyPDFLoader("my_document.pdf")
pages = loader.load_and_split()
```

Similarly, Docx2txtLoader loads a DOCX file as a single document. load_and_split then breaks it into chunks, trying blank lines first, then newlines, then spaces, as shown in the following code snippet:

```python
from langchain.document_loaders import Docx2txtLoader

# Load the DOCX as one Document, then split it into chunks
loader = Docx2txtLoader("my_document.docx")
paragraphs = loader.load_and_split()
```

Step 4: Create Embeddings and Store them in a Faiss Vector Database

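Next, create an embedding for each chunk with the Azure OpenAI ADA embedding model and index the embeddings with Langchain's FAISS class. Saving the index to disk lets you reload it later without embedding the document again.

```python
from langchain.embeddings.openai import OpenAIEmbeddings
from langchain.vectorstores import FAISS

# Embedding model deployed in your Azure OpenAI resource (the ADA model)
embeddings = OpenAIEmbeddings(
    deployment=OPENAI_ADA_DEPLOYMENT_NAME,
    model=OPENAI_ADA_MODEL_NAME,
    openai_api_base=OPENAI_DEPLOYMENT_ENDPOINT,
    openai_api_version=OPENAI_DEPLOYMENT_VERSION,
    openai_api_key=OPENAI_API_KEY,
    openai_api_type="azure",
    chunk_size=1,  # number of texts per embedding request, not characters per chunk
)

# Embed the chunks, build the Faiss index, and save it to disk
db = FAISS.from_documents(documents=pages, embedding=embeddings)
db.save_local("./dbs/documentation/faiss_index")
```

Here chunk_size sets how many texts are sent in each embedding request. A value of 1 sends one chunk per request, which some Azure OpenAI embedding deployments required.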
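The chunk_size argument of OpenAIEmbeddings controls batching, not text length. The list of texts is sent to the embeddings endpoint in groups of at most chunk_size. The hypothetical helper below shows that grouping in isolation and makes no API calls.

```python
from typing import Iterator, Sequence


def batch_texts(texts: Sequence[str], chunk_size: int) -> Iterator[list[str]]:
    """Yield consecutive groups of at most chunk_size texts (hypothetical helper).

    Each group stands for one embeddings request, so chunk_size=1 means one
    request per text chunk.
    """
    if chunk_size < 1:
        raise ValueError("chunk_size must be at least 1")
    for start in range(0, len(texts), chunk_size):
        yield list(texts[start:start + chunk_size])


chunks = ["first chunk", "second chunk", "third chunk"]
print([len(batch) for batch in batch_texts(chunks, chunk_size=1)])
print([len(batch) for batch in batch_texts(chunks, chunk_size=16)])
```

For example, embedding 100 chunks takes 100 requests with chunk_size=1 but only 7 requests with chunk_size=16, if your deployment accepts that many inputs per request.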

Step 5: Create a Chatbot Agent

Next, create the language model that will generate your chatbot's answers from the user input and the retrieved chunks. Langchain's AzureChatOpenAI class connects to a chat model deployment in your Azure OpenAI resource, such as a GPT-3.5 Turbo or GPT-4 deployment. You can choose the deployment and its parameters to suit your needs, as shown in the following code snippet:

```python
from langchain.chat_models import AzureChatOpenAI

# Chat model deployment that generates the chatbot's answers
llm = AzureChatOpenAI(
    deployment_name=OPENAI_DEPLOYMENT_NAME,
    model_name=OPENAI_MODEL_NAME,
    openai_api_base=OPENAI_DEPLOYMENT_ENDPOINT,
    openai_api_version=OPENAI_DEPLOYMENT_VERSION,
    openai_api_key=OPENAI_API_KEY,
    openai_api_type="azure",
)
```
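The snippets in Steps 4 and 5 use settings such as OPENAI_API_KEY and OPENAI_DEPLOYMENT_ENDPOINT without defining them. One common approach is to read them from environment variables. The sketch below assumes variables with the same names as the constants used in this post and fails fast if any are missing. The function name load_settings is hypothetical.

```python
import os
from collections.abc import Mapping

# Settings referenced by the snippets in this post; reading them from
# environment variables of the same name is an assumption of this sketch.
SETTING_NAMES = [
    "OPENAI_API_KEY",
    "OPENAI_DEPLOYMENT_ENDPOINT",
    "OPENAI_DEPLOYMENT_VERSION",
    "OPENAI_DEPLOYMENT_NAME",
    "OPENAI_MODEL_NAME",
    "OPENAI_ADA_DEPLOYMENT_NAME",
    "OPENAI_ADA_MODEL_NAME",
]


def load_settings(environ: Mapping[str, str] = os.environ) -> dict[str, str]:
    """Collect the Azure OpenAI settings, raising if any are missing or empty."""
    missing = [name for name in SETTING_NAMES if not environ.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {name: environ[name] for name in SETTING_NAMES}


# Hypothetical usage before Steps 4 and 5:
# settings = load_settings()
# OPENAI_API_KEY = settings["OPENAI_API_KEY"]
```

Keeping the key out of the source code also makes it easier to share the app without exposing credentials.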

Step 6: Define a Chain of Actions for your Chatbot Agent

Next, define a chain of actions for your chatbot. In Langchain, a chain is a sequence of calls, such as calls to a model, a retriever, or a data-processing step, that runs as one unit to perform a specific task. Here, the chain takes the user input, queries the Faiss index for the most relevant chunks, and generates a response with the Azure OpenAI chat model.

Langchain's ConversationalRetrievalChain implements this pattern. When there is chat history, it first rewrites a follow-up question into a standalone question using the condense_question_prompt. It then retrieves the top matching chunks and passes them to the model together with the question:

```python
from langchain.chains import ConversationalRetrievalChain
from langchain.prompts import PromptTemplate
from langchain.vectorstores import FAISS

# Prompt that rewrites a follow-up question into a standalone question
QUESTION_PROMPT = PromptTemplate.from_template(
    "Given the following conversation and a follow-up question, rephrase the "
    "follow-up question to be a standalone question.\n\n"
    "Chat History:\n{chat_history}\nFollow-Up Input: {question}\nStandalone question:"
)

# Load the Faiss index saved in Step 4, using the same embeddings object
vectorStore = FAISS.load_local("./dbs/documentation/faiss_index", embeddings)

# Use the Faiss index to find the 2 chunks most similar to each question
retriever = vectorStore.as_retriever(search_type="similarity", search_kwargs={"k": 2})

qa = ConversationalRetrievalChain.from_llm(
    llm=llm,
    retriever=retriever,
    condense_question_prompt=QUESTION_PROMPT,
    return_source_documents=True,
    verbose=False,
)
```

Step 7: Run your Chatbot Agent

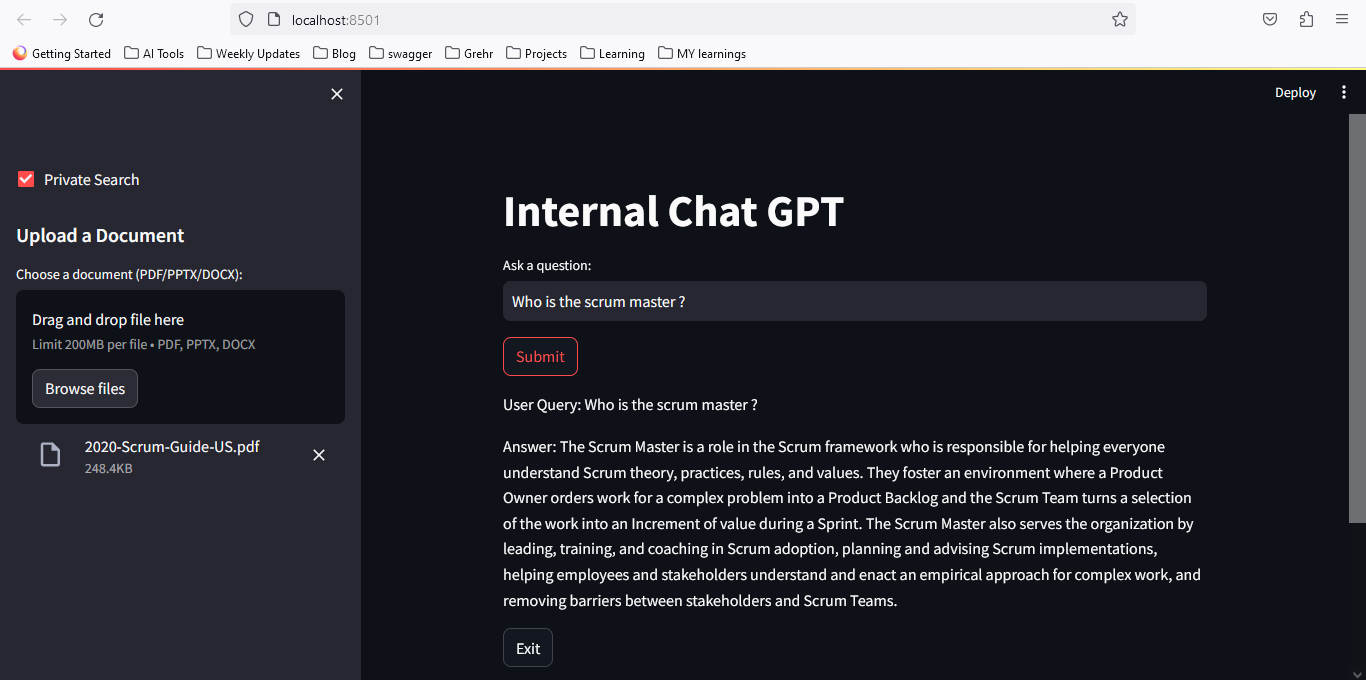

You can use the Streamlit library to create a web-based interface for your chatbot, as shown in the following code snippet:

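```python
import streamlit as st


# Process the user query and get a response from the chain
def ask_question_with_context(qa, question, chat_history):
    result = qa({"question": question, "chat_history": chat_history})
    chat_history.append((question, result["answer"]))
    return chat_history


# Streamlit reruns the script on every interaction, so keep the history in session state
if "chat_history" not in st.session_state:
    st.session_state.chat_history = []

user_query = st.text_input("Ask a question:")
if st.button("Submit") and user_query:
    st.write("User Query:", user_query)
    chat_history = ask_question_with_context(qa, user_query, st.session_state.chat_history)
    response = chat_history[-1][1] if chat_history else "No response"
    st.write("Answer:", response)
```

Save the script (for example, as app.py) and start the interface with streamlit run app.py.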
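Each call to qa(...) in the app first makes the question standalone and retrieves the top chunks. With its default "stuff" strategy, ConversationalRetrievalChain then places all retrieved chunks into a single prompt together with the question. The sketch below imitates that assembly in plain Python, including the kind of history formatting used for the condense step. The helper names and template wording are hypothetical, loosely modeled on Langchain's default question-answering prompt, whose exact text varies by version.

```python
def format_chat_history(chat_history: list[tuple[str, str]]) -> str:
    """Render (question, answer) pairs as alternating Human/Assistant lines."""
    return "\n".join(f"Human: {q}\nAssistant: {a}" for q, a in chat_history)


def build_prompt(question: str, retrieved_chunks: list[str]) -> str:
    """'Stuff' every retrieved chunk into one context block ahead of the question."""
    context = "\n\n".join(retrieved_chunks)
    return (
        "Use the following pieces of context to answer the question at the end. "
        "If you don't know the answer, say that you don't know.\n\n"
        f"{context}\n\nQuestion: {question}\nHelpful Answer:"
    )


# Hypothetical history and retrieved chunks
history = [("What does the manual cover?", "Installation and setup.")]
chunks = ["Run the installer as administrator.", "Restart after installation."]
print(format_chat_history(history))
print(build_prompt("How do I install it?", chunks))
```

Stuffing is simple and keeps every retrieved chunk visible to the model. It is one reason the retriever above asks for only k=2 chunks: the whole prompt must fit in the chat model's context window.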

What are the Benefits of Using a Private Chatbot with Langchain, Azure OpenAI, and Faiss Vector Store?

By using a private chatbot with Langchain, Azure OpenAI, and Faiss Vector Store for local document query, you can achieve the following benefits:

- Security: Your document and its Faiss index stay on your own machine rather than on a third-party chatbot platform. The text of the chunks is sent only to the Azure OpenAI resource you configure, for embedding and for answering questions. You can also control the access and usage of your chatbot, as you do not need to share it with anyone else.

- Personalization: You can customize your chatbot according to your needs and preferences, as you can choose the text format, the chunk size, the embedding model, the index type, the generation model, and the chain of actions for your chatbot.

- Up-to-Date Content: You can keep your chatbot current by updating your local document and re-running the loading and embedding steps, or by adding the new chunks to the existing Faiss index. You can also switch to newer Azure OpenAI models as they become available to improve the quality of the responses.

How to Use Your Private Chatbot with Langchain, Azure OpenAI, and Faiss Vector Store?

Once you have built your private chatbot with Langchain, Azure OpenAI, and Faiss Vector Store for local document query, you can use it for various purposes, such as:

- Learning: You can use your chatbot to learn new information or skills from your local document, such as a textbook, a manual, or a tutorial. You can ask your chatbot questions, request summaries, or request examples from your local document.

- Researching: You can use your chatbot to research a topic or a problem from your local document, such as a paper, a report, or a case study. You can ask your chatbot to provide you with relevant facts, arguments, or evidence from your local document.

- Creating: You can use your chatbot to create new content or products from your local document, such as a blog, a presentation, or a prototype. You can ask your chatbot to generate ideas, suggestions, or solutions from your local document.

Conclusion

In this blog, we have learned how to build a private chatbot with Langchain, Azure OpenAI, and Faiss Vector Store for local document queries. Combining these technologies lets you build a chatbot that answers questions from your own documents while you control where the data is stored and which Azure OpenAI resource processes it. By following the steps above, you can tailor the chatbot to your needs. The same stack can support applications such as learning, research, and content creation.

Thank you for reading!
