If you’re in technology, AIs are everywhere nowadays. At work, I use an AI to help me build proofs of concept, sometimes for communicating with other AIs. At home, I use Google’s Bard to help with day-to-day mental chores such as meal planning. Even at play, when I indulge in some Gran Turismo, there’s an AI to race against. I can’t do anything about the AIs I use more casually (aside from being aware of them). At work, though, I have some control! When interfacing with an AI through an API, you can tweak parameters or try the same query with different contexts before getting a response. And what better way to judge user engagement with an AI than Optimizely’s Feature Experimentation?

OpenAI API

Here I’ll be working with OpenAI’s API, along with a client package from NuGet that hides some rather ugly setup. I’ll include an HttpClient version of the call at the end of the post so you can see the details involved.

In this demo, I’ll be switching the AI model that my application uses, but this approach can be used on any of the parameters sent to the AI. Your basic OpenAI API call follows this format:

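    // OpenAIAPI and CompletionRequest come from the NuGet client package.
    client = new OpenAIAPI(apiKey);

    var parameters = new CompletionRequest
    {
        // OpenAI spells this model ID with a dot, not an underscore.
        Model = "gpt-3.5-turbo",
        Prompt = promptText,
        Temperature = 0.7,
        MaxTokens = 100
    };

    // Send the request and keep the first completion that comes back.
    var response = await client.Completions.CreateCompletionAsync(parameters);
    generatedText = response.Completions[0].Text;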

This should look familiar to any developer who’s used a client wrapper for an API. The client handles the connection details, and the business end of the request is the CompletionRequest. That’s where we select the AI model to query, supply the context/prompt, and set the other details relevant to the AI setup, such as Temperature and MaxTokens.

But what if we aren’t sure that the model is the best fit for our purposes? Wouldn’t it be nice to try out a couple of different models and track metrics on them? Feature Experimentation can help with that!

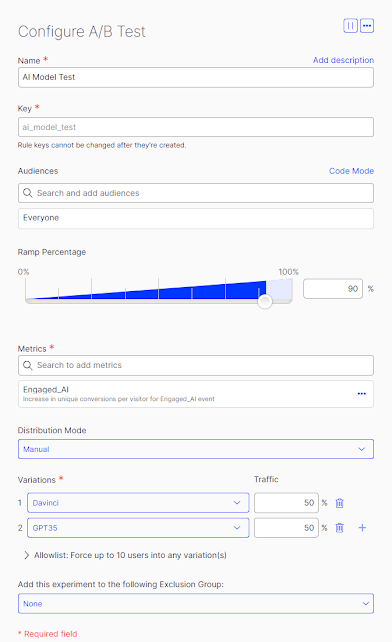

What I’ve done is set up an experiment that either sends the user’s query to ChatGPT 3.5 or the Davinci model:

From there, we configure the Optimizely client back in our solution:

    optimizely = OptimizelyFactory.NewDefaultInstance(Configuration.GetValue<string>("Optimizely:SdkKey"));

    // A random ID stands in for a real user ID in this demo.
    user = optimizely.CreateUserContext(numGen.Next().ToString());

    // Decide first, then track, so the event follows the decision it should be attributed to.
    var decision = user.Decide("ai_model");
    user.TrackEvent("Engaged_AI");

    if (decision.Enabled)
    {
        // The variation key doubles as the model name sent to OpenAI.
        var aiModel = decision.VariationKey;
        var parameters = new CompletionRequest
        {
            Model = aiModel,
            Prompt = promptText,
            Temperature = 0.7,
            MaxTokens = 100
        };

        var response = await client.Completions.CreateCompletionAsync(parameters);
        generatedText = response.Completions[0].Text;
    }
    else
    {
        generatedText = "AI is not enabled for this user";
    }
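
One wrinkle with using the variation key as the model name: the key has to match an OpenAI model ID exactly, and a variation key may not be able to carry every character a model ID uses (the dot in gpt-3.5-turbo, for instance). A small lookup is one way around that. Treat this as a sketch only: the `ModelResolver` class, the variation keys on the left, and the model IDs on the right are placeholders for illustration, not values taken from the experiment above.

```csharp
using System;
using System.Collections.Generic;

public static class ModelResolver
{
    // Hypothetical variation keys (left) mapped to OpenAI model IDs (right).
    // Both sides are assumptions; swap in the keys from your own experiment.
    private static readonly Dictionary<string, string> ModelsByVariation =
        new Dictionary<string, string>
        {
            ["gpt-3_5-turbo"] = "gpt-3.5-turbo",
            ["davinci"] = "text-davinci-003",
        };

    // Returns the model ID for a variation key, or the fallback when the
    // key is null or not in the lookup.
    public static string Resolve(string variationKey, string fallback)
    {
        if (variationKey != null &&
            ModelsByVariation.TryGetValue(variationKey, out var modelId))
        {
            return modelId;
        }
        return fallback;
    }
}
```

With something like this in place, `Model = ModelResolver.Resolve(decision.VariationKey, "gpt-3.5-turbo")` could replace the direct assignment, and an unexpected key would fall back to a known model instead of producing a failed request.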

This is also the place to add more context to the prompt, giving the AI a better understanding of the query. A line such as “you are a customer service agent for company A” tells the AI its starting point.
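
As a rough sketch of that idea, a small helper can prepend the context to whatever the user typed. `PromptBuilder` and `WithContext` are hypothetical names, and separating the two parts with a blank line is just one reasonable choice, not something the API requires.

```csharp
using System;

public static class PromptBuilder
{
    // Puts the context in front of the user's query, separated by a blank line.
    // When there is no context, the query is returned unchanged.
    public static string WithContext(string context, string userQuery)
    {
        if (string.IsNullOrWhiteSpace(context))
        {
            return userQuery;
        }
        return context.Trim() + "\n\n" + userQuery;
    }
}
```

In the request, that would look like `Prompt = PromptBuilder.WithContext("You are a customer service agent for company A.", promptText)`. The context string is another parameter you could vary between experiment variations.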

Now as you introduce AI into your applications, you can see how both Feature Flagging and Feature Experimentation can help you evaluate your approach to AI.