Docker is an open-source project that has changed how we think about deploying applications to servers. By leveraging resource isolation features of the Linux kernel, such as namespaces and control groups (cgroups), Docker makes it easy to isolate server applications in containers, control resource allocation, and design simpler deployment pipelines. Moreover, Docker does all of this without the overhead of full-fledged virtual machines.

Let’s see how Docker works.

An application running in a virtual machine needs, on top of the hypervisor, a full guest operating system and all of its supporting libraries. Containers instead share the host operating system’s kernel, which makes them much more efficient than virtual machines in terms of system resources. Rather than virtualizing hardware, containers run on a single Linux instance, so you can leave behind most of the bulk of a VM and keep a small, neat capsule containing your application. The essential difference is that processes inside a container run as native processes on the host and do not incur the overhead of hypervisor execution. Additionally, containers can reuse shared libraries and share data with one another.

Therefore, with a well-tuned container system, you can run as many as four to six times as many server application instances as you can with virtual machines on the same hardware. Another reason containers are popular is that they lend themselves to Continuous Integration/Continuous Deployment (CI/CD).

Now, after a quick glance at how Docker works, let’s understand Docker Architecture.

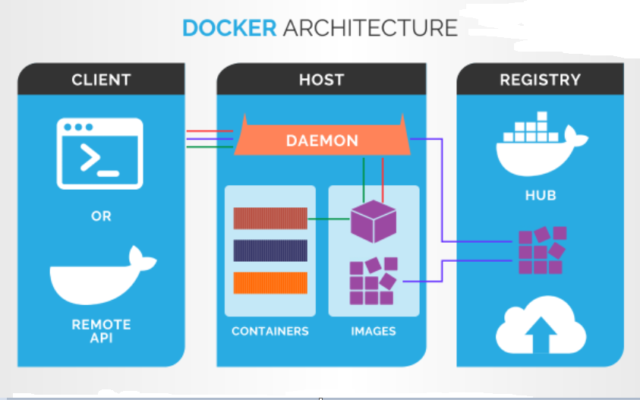

Docker uses a client-server architecture. The Docker client talks to the Docker daemon, which does the heavy lifting of building, running, and distributing your Docker containers. The Docker client and daemon can run on the same system, or you can connect a Docker client to a remote Docker daemon. The Docker client and daemon communicate using a REST API over UNIX sockets or a network interface. Another Docker client is Docker Compose, which lets you work with applications consisting of a set of containers.
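To make the client/daemon conversation concrete, here is a minimal sketch of a client that asks the daemon for its version over the UNIX socket, using only the Python standard library. It assumes the daemon listens on the default socket path /var/run/docker.sock and that the current user may read it; the class and function names are made up for this illustration.

```python
import http.client
import json
import os
import socket


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTP connection that talks over a UNIX domain socket instead of TCP."""

    def __init__(self, socket_path, timeout=5):
        # "localhost" only fills the Host header; no TCP connection is made.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def daemon_version(socket_path="/var/run/docker.sock"):
    """Return the daemon's version info as a dict, or None if unreachable."""
    if not os.path.exists(socket_path):
        return None
    conn = UnixHTTPConnection(socket_path)
    try:
        # GET /version is an endpoint of the Docker Engine REST API.
        conn.request("GET", "/version")
        response = conn.getresponse()
        if response.status != 200:
            return None
        return json.loads(response.read())
    except (OSError, http.client.HTTPException, ValueError):
        return None
    finally:
        conn.close()


if __name__ == "__main__":
    info = daemon_version()
    print(info.get("Version") if info else "Docker daemon not reachable")
```

The docker command-line client does essentially the same thing for every command: it turns the command into one or more HTTP requests and sends them to the daemon.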

This architecture has three main components: the Docker client, the Docker host, and the Docker registry.

Docker Client

Users interact with Docker through a client, and Docker commands use the Docker API. When a Docker command runs, the client sends it to the Docker daemon, which carries it out. A Docker client can communicate with more than one daemon.
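As a rough illustration of one client talking to several daemons, the sketch below builds docker command lines aimed at different daemons with the CLI's --host (-H) option. The daemon addresses and the helper name are hypothetical; the script only prints the commands rather than running them.

```python
def docker_command(args, host=None):
    """Build a docker CLI invocation, optionally aimed at a specific daemon."""
    command = ["docker"]
    if host is not None:
        # --host (-H) selects which daemon socket the client connects to.
        command += ["--host", host]
    return command + list(args)


# Hypothetical daemons: the local socket and a remote machine reached over SSH.
DAEMONS = {
    "local": "unix:///var/run/docker.sock",
    "staging": "ssh://deploy@staging.example.com",
}

if __name__ == "__main__":
    for name, host in DAEMONS.items():
        print(name, "->", " ".join(docker_command(["ps"], host)))
```

Setting the DOCKER_HOST environment variable is another way to point the same client at a different daemon.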

Docker Host

The Docker host provides a complete environment to execute and run applications. It comprises the Docker daemon, Images, Containers, Networks, and Storage. As previously mentioned, the daemon is responsible for all container-related actions and receives commands via the CLI or the REST API. It can also communicate with other daemons to manage its services.

Docker Registry

Docker registries are services where you can store and download images. In other words, a Docker registry contains Docker repositories, each of which hosts one or more Docker images. The best-known public registry is Docker Hub, and you can also run private registries. The most common commands when working with registries are docker push, docker pull, and docker run, which pulls an image first if it is not available locally.
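The relationship between registries, repositories, and images shows up in the image name itself. The following is a simplified sketch of how a name such as the one passed to docker pull can be split into registry, repository, and tag. It is an approximation of Docker's naming rules, not Docker's own parser: digests (name@sha256:...) are not handled, and the function name is invented for this example.

```python
def parse_image_reference(reference):
    """Split an image reference into (registry, repository, tag).

    Simplified: digests (name@sha256:...) are not handled.
    """
    registry = "docker.io"  # Docker Hub is the default registry
    remainder = reference
    first, slash, rest = reference.partition("/")
    # The first component is a registry host only if it looks like one.
    if slash and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    # A tag, if present, follows a colon in the last path component.
    path, _, last = remainder.rpartition("/")
    name, colon, tag = last.partition(":")
    if not colon:
        tag = "latest"
    repository = f"{path}/{name}" if path else name
    # Official images on Docker Hub live under the implicit "library/" namespace.
    if registry == "docker.io" and "/" not in repository:
        repository = "library/" + repository
    return registry, repository, tag


if __name__ == "__main__":
    for ref in ["nginx", "myuser/myapp:1.0", "localhost:5000/myapp"]:
        print(ref, "->", parse_image_reference(ref))
```

This is why pulling nginx fetches from Docker Hub, while a name that starts with a host such as localhost:5000 goes to a private registry.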

Installing Docker

Docker runs natively on Linux, so depending on the target distribution, installing it can be as easy as sudo apt-get install docker.io (on Debian or Ubuntu). Because the Docker daemon uses Linux-specific kernel features, Linux containers cannot run natively on macOS or Windows. There, Docker runs the daemon inside a Linux virtual machine. This was originally done with Boot2Docker, which bundled a VirtualBox virtual machine, Docker itself, and the Boot2Docker management utilities; Boot2Docker has since been deprecated in favor of Docker Desktop. You can follow the official installation instructions for macOS and Windows to install Docker on these platforms.

How can Docker help your project?

When your development team changes, introducing new developers to the project always takes time. Before they can start coding, they must set up their local development environment for the project, for example a local server, a database, or third-party libraries. This may take anywhere from a few hours to many days, depending on the project’s complexity and how well the setup is documented. Docker automates this setup and installation work so new developers can be productive from the first day. Instead of doing everything manually, they simply run one command that prepares the development environment for them. This saves a lot of time, and the bigger your development team and the higher its turnover, the more you gain by using Docker.

When your software runs in different environments, you may notice inconsistent behavior between the machines it runs on. Something that works on a developer’s computer may not work on the server. The number of issues grows with every new environment, such as another server (test versus production) or another developer’s computer with a different operating system. Docker lets you run the software in containers isolated from the outside world, so your app behaves consistently in every environment.

When your software grows and consists of many bits and pieces, developers add new libraries, services, and other dependencies almost daily. The more complex your software becomes, the harder it is to keep track of all the parts required for it to run. Without Docker, every change to the project setup must be communicated to other developers and documented. Otherwise, their copy of the project will stop working, and they won’t know why. With Docker, all the required components of the software are specified in Docker configuration files (like Dockerfile and docker-compose.yml).
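To give a feel for what these configuration files might contain, here is a small Python script that writes a minimal Dockerfile and docker-compose.yml into a project directory. Everything specific in it is an assumption made for illustration: a Python web app started from app.py, a requirements.txt file, port 8000, and a PostgreSQL database service.

```python
import tempfile
from pathlib import Path

# Hypothetical Dockerfile for a small Python web app.
DOCKERFILE = """\
FROM python:3.12-slim
WORKDIR /app
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
COPY . .
CMD ["python", "app.py"]
"""

# Hypothetical Compose file: the app plus the database it depends on.
COMPOSE_FILE = """\
services:
  web:
    build: .
    ports:
      - "8000:8000"
    depends_on:
      - db
  db:
    image: postgres:16
    environment:
      POSTGRES_PASSWORD: example
"""


def write_docker_config(project_dir):
    """Write both configuration files and return the names written."""
    project = Path(project_dir)
    project.mkdir(parents=True, exist_ok=True)
    files = {"Dockerfile": DOCKERFILE, "docker-compose.yml": COMPOSE_FILE}
    for name, content in files.items():
        (project / name).write_text(content)
    return list(files)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        print(write_docker_config(tmp))
```

With files like these checked into the repository, a new dependency becomes a one-line change that every developer picks up the next time they run docker compose up --build.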

When you want your software to be scalable and handle more users, Docker won’t automatically make your app scalable, but it can help. Docker containers can be launched in many copies that run in parallel, so the more users you have, the more containers you launch (e.g., in the cloud). Before you do so, your software must be prepared to run as multiple simultaneous instances. Once those scalability blockers are removed, Docker containers come in handy for launching a scalable application.
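As a rough sketch of launching many copies, the function below generates one docker run command per replica, each with its own container name and host port. The image name myapp:latest, the web-N naming scheme, and the port numbers are all hypothetical; the script prints the commands instead of executing them.

```python
def replica_commands(image, replicas, base_port=8001, container_port=8000):
    """Build one `docker run` command per replica of the same image."""
    commands = []
    for index in range(replicas):
        commands.append([
            "docker", "run", "--detach",
            "--name", f"web-{index + 1}",
            # Each replica gets its own host port mapped to the same container port.
            "--publish", f"{base_port + index}:{container_port}",
            image,
        ])
    return commands


if __name__ == "__main__":
    for command in replica_commands("myapp:latest", 3):
        print(" ".join(command))
```

In practice a load balancer or an orchestrator would sit in front of these containers; with Docker Compose, docker compose up --scale can start several instances of a service in one step.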

When your hosting infrastructure changes, you don’t want to be locked in. Small websites and applications don’t require complex hosting infrastructure, but as a business grows and evolves, so do its server requirements. In a fast-paced business environment, web infrastructure must be flexible enough to adapt quickly. That flexibility helps ensure both that your website won’t crash and that the cost of the infrastructure matches your actual needs. Because Docker containers are standardized and widely adopted, they can be launched in almost any server environment.

Conclusion

We took an introductory look at what Docker is, how it works, and how it can benefit your project.

If you enjoyed this and found it informative, do share it with your colleagues. Also, let me know in the comments what topic I should cover in my next post.
